Everything Wrong With AVMs in Two Pictures

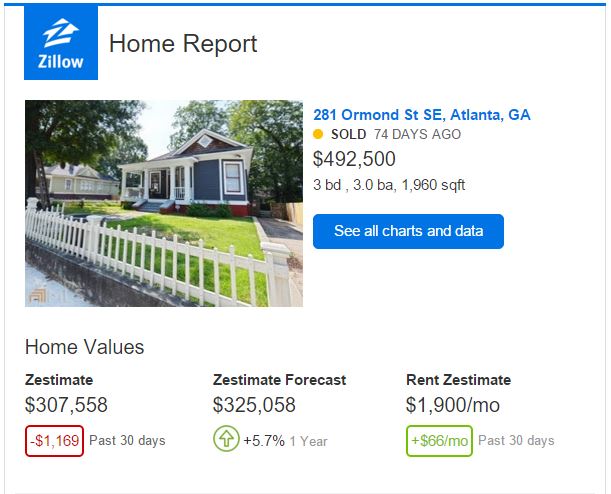

My company, Virgent Realty, sold a home in Atlanta’s hot Grant Park neighborhood just under three months ago for $492,500. The home had numerous showings its first weekend and went under contract five days after we put it on the market. This morning, I was greeted with this email from Zillow:

Zestimate for 281 Ormond Street in Grant Park

Note the $184,942 difference between the most recent sale price and the current Zestimate. After having a good laugh, I decided to give Redfin’s new AVM a shot, since Redfin claims it has the lowest error rate of any AVM. Here’s what it had:

Closer, but no cigar – still a $50,000 difference between the actual value and the AVM estimate.
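Expressed as a share of the $492,500 sale price, the two gaps work out as follows. This quick check assumes both estimates sit below the sale price, which the post implies, and uses the rounded $50,000 figure for Redfin, so Redfin's implied estimate is approximate.

```python
SALE_PRICE = 492_500

# Gaps reported in the post; the Redfin figure is the post's rounded "$50,000".
gaps = {"Zestimate": 184_942, "Redfin estimate": 50_000}


def pct_error(gap: float, actual: float) -> float:
    """Error expressed as a percentage of the actual sale price."""
    return 100.0 * gap / actual


for name, gap in gaps.items():
    implied = SALE_PRICE - gap  # assumes the estimate is below the sale price
    print(f"{name}: ${implied:,} ({pct_error(gap, SALE_PRICE):.1f}% below the sale price)")
```

By this measure the Zestimate misses by more than a third of the sale price (about 37.6%), while Redfin's miss is roughly a tenth (about 10.2%).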

As I mentioned in my last post on the issues with Redfin’s new AVM, errors like this happen when too much data science and not enough business sense go into the development of a tool. In a market economy, the value of a house is whatever someone is willing to pay for it. As such, an AVM shouldn’t have to do much work for a newly sold house – the estimated value should just be the actual sale price.

But that’s not how AVMs work. They instead focus on finding the “right answer” based on local price per square foot, school districts, and a number of other factors. Unfortunately, as stated above, the “right answer” is actually just whatever a buyer is willing to pay, and not the output of a formula.

What makes this specific example so egregious is that I actually believe both AVMs could easily produce a better result. Part of the reason this house sold for so much more than the AVM estimates is that the previous owners did a fantastic renovation and turned it into a one-of-a-kind residence. The AVMs have no way of recognizing that through data.

However, once the house sold for the price it did, a good AVM would have recognized that its valuation was off for some reason outside of the data set, and reestablished a baseline value at the most recent sale price.
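One way to express "reestablish a baseline at the most recent sale price" in code is sketched below. It is a hypothetical scheme, not how Zillow or Redfin actually compute their estimates: the recent sale price, optionally carried forward by an assumed local market change since closing, is blended with the model's own estimate. The sale's weight starts at 100% on closing day and decays linearly to zero over an illustrative 48-month horizon, so the sale still carries the majority of the weight for about the first two years. The function name `anchored_estimate`, the `market_change` input, and the `horizon_months` default are all assumptions made for illustration.

```python
from typing import Optional


def anchored_estimate(model_estimate: float,
                      last_sale_price: Optional[float] = None,
                      months_since_sale: Optional[float] = None,
                      market_change: float = 0.0,
                      horizon_months: float = 48.0) -> float:
    """Blend a recent sale price into a model's estimate (hypothetical scheme).

    model_estimate    -- what the comps / price-per-square-foot model says
    last_sale_price   -- most recent recorded sale, or None if there is none
    months_since_sale -- time elapsed since that sale closed
    market_change     -- assumed local price change since the sale, e.g. 0.03 for +3%
    horizon_months    -- illustrative window after which the sale is ignored
    """
    # No sale on record: fall back to the model alone.
    if last_sale_price is None or months_since_sale is None:
        return model_estimate
    # Re-baseline on the sale price, carried forward by the assumed market change.
    baseline = last_sale_price * (1.0 + market_change)
    # Weight on the sale: 1.0 at closing, falling linearly to 0.0 at the horizon.
    weight = min(1.0, max(0.0, 1.0 - months_since_sale / horizon_months))
    return weight * baseline + (1.0 - weight) * model_estimate


# Figures from the post: a $492,500 sale about three months ago, and the
# Zestimate implied by the $184,942 gap (492,500 - 184,942 = 307,558).
print(round(anchored_estimate(307_558, 492_500, months_since_sale=3)))
```

Three months after closing, this sketch would put the house at about $481,000 instead of roughly $308,000. The linear decay is just one simple choice; an exponential decay would be an equally reasonable assumption.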

By ignoring the most recent sale price, both AVMs are working under the assumption that the buyer overpaid for the property, and that the underlying value of the house is still directly tied to a data set rather than to qualitative factors the tool can’t understand.

For Zillow and Redfin, this has an unintended consequence: if you’re the buyer of this Grant Park home and you see that both AVMs estimate you overpaid by roughly $50,000 to $185,000, how likely are you to trust these companies when it comes time to sell?

Sam DeBord

Posted at 09:57h, 23 December

This is a precise criticism and an easily correctable issue. Algorithm designers need to tailor their models to rely very heavily on a recent sale price. Don’t overthink it. Don’t trust data on other homes over eyeballs on your subject property.

The single biggest factor in valuation error rates is the inability of an algorithm to actually see the property. When a buyer purchases a home, the algorithm gets a “cheat sheet” to how it looks in real life. An appraiser was probably involved in confirming that price as well. Skew your valuations with the majority of the weight going to the most recent sale price, if and when a home has been sold in the past year or two.

Drew Meyers

Posted at 10:04h, 23 December

Recent sale prices on specific homes are already the biggest input into the algorithm, as far as I know. Maybe things have changed over the past five years, though.

Sam DeBord

Posted at 10:11h, 23 December

I’m sure they’re included, but I think the illustration above shows that they’re not weighted heavily enough. Being off by 30%+ when the recent sale stat is right next to it says that they’re still discounting the sale price and leaning on the rest of the algorithm too much. There will always be outliers, but this doesn’t look like one.

Charles Williams

Posted at 13:54h, 23 December

Totally agree. For what it’s worth, the AVM we use has that property at $508,609.